Disclaimer: I am not perfect and neither are my notes. If you notice anything that requires clarification or correction, please email me at melanie (dot) marttila (at) gmail (dot) com and I will fix things post-hasty.

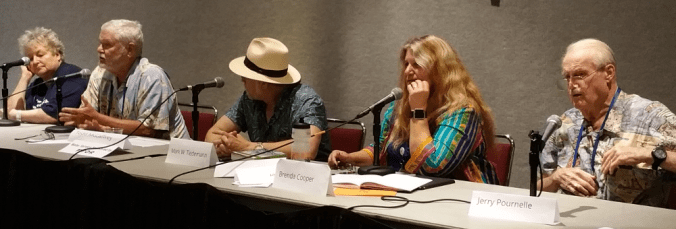

Panellists: Pat Cadigan, Gregory Benford, Mark W. Tiedemann, Brenda Cooper (moderator), Jerry Pournelle

Joined in progress …

GB: We can work out the engineering problems. The people problems, we can’t.

JP: We have to have some form of artificial gravity. Currently, interstellar travel can only be accomplished by accelerating halfway and then decelerating the other half. The Fermi paradox says there might be one civilization, not planet, not planet with some form of life, but one civilization, per galaxy.

PC: People choose to live in habitats orbiting Earth. They don’t have artificial gravity. The solution could be epigenetics. Adapt the body to life in space. Once you pass a few generations, the privations become irrelevant. Then we have to face the challenges of exploration and colonization of new worlds. We’ve faced some of these problems before. The prairie skies produced agoraphobia. When the generation ships land, people will be totally freaked. We’ll need to regulate space and noise.

BC: There was a 100 Year Starship symposium at which it was posited that generation ships would have to have a military-like social structure.

MWT: I don’t see why we’d want to do that. It would work, but not without the benefits that make such a system worth it.

GB: That might be the wrong analog. If you have a pool, you need a lifeguard. The army has a purpose in the larger community. A generation ship is a community.

JP: The Melanesians who settled Hawaii knew they were going on a one-way trip. A worker who works, lives, and never leaves Manhattan might as well be on a colony.

PC: If we have habitations around Saturn, they’re too far away for help to get there in case of an emergency. It would have to be a regimented society. They would have to constantly be checking their equations, their plans. They would never want to be doing something for the first time.

MWT: The personalities of the volunteers will influence what happens on the ship, and in the colony.

BC: What would people on the ship do for fun?

GB: What does anyone do? Sex, drugs, and rock ‘n’ roll.

PC: Even the frivolous pursuits would have to be engineered.

MWT: I think virtual reality would be a major component.

BC: How can you teach generation after generation order and discipline and then expect innovation and creativity to emerge at the destination?

JP: That’s what novelists are for.

And that was time.

Next week: The dark side of fairy tales 🙂

Thanks for stopping by. Hope you found something of interest or entertainment.

Be well until next I blog.